Serverless Architecture #04: AWS Lambda Cold Starts, PART-3: Just the First Request

"Meh, it's only the first request, right? So what if one request is slow, the next million requests will be fast."

(As quoted by Yan Cui, the AWS Serverless Hero whose blog this post is based on.)

Unfortunately, that is an oversimplification of what happens.

A cold start happens once for each concurrent execution of your function.

To summarize, this post covers:

- Why "a cold start happens once for each concurrent execution of your function"

- User requests that come in sequentially: 1 cold start out of 100 is bearable, and it won't even show up in the 99th-percentile latency

- User requests that come in droves instead!!! Scaling for unpredictability: what happens if we receive 100 requests with a concurrency of 10?

- When API Gateway has to add more concurrent executions of your Lambda function, and how that leads to cold starts

- When executions are garbage collected

- Strategies to reduce cold starts

Strategy 01: Reducing cold starts by pre-warming, assuming spikes are predictable

Betraying the ethos of serverless compute vs. customer satisfaction

Strategy 02: Reducing the impact of cold starts by reducing the length of cold starts:

- Right choice of Lambda function language

- Higher memory setting

- Optimizing dependencies and package size

- Staying as far away from VPCs as you possibly can

Strategy 03: What if the APIs are seldom used?

Think like a hacker: a cron job that runs every 5–10 mins and pings the API (with a special ping request)

- Don't let your own preference blind you

NOTE-A: Bursty Traffic Pattern Applications

1.1 User Requests Come in Sequentially: Man, I can't believe it!!!

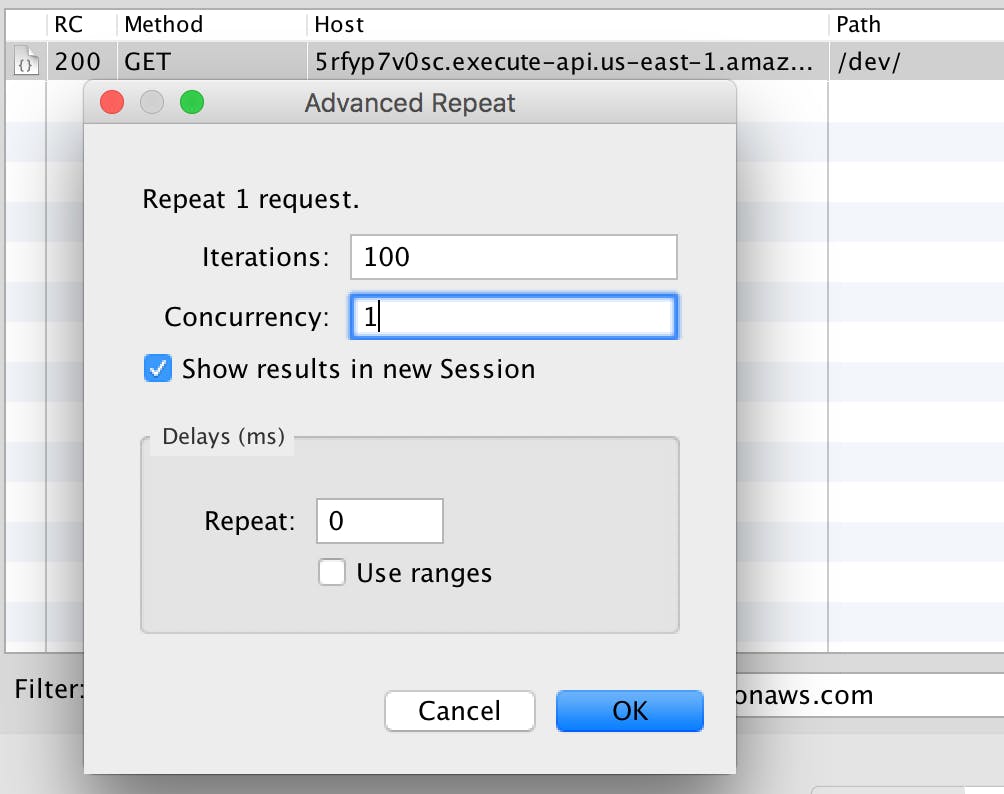

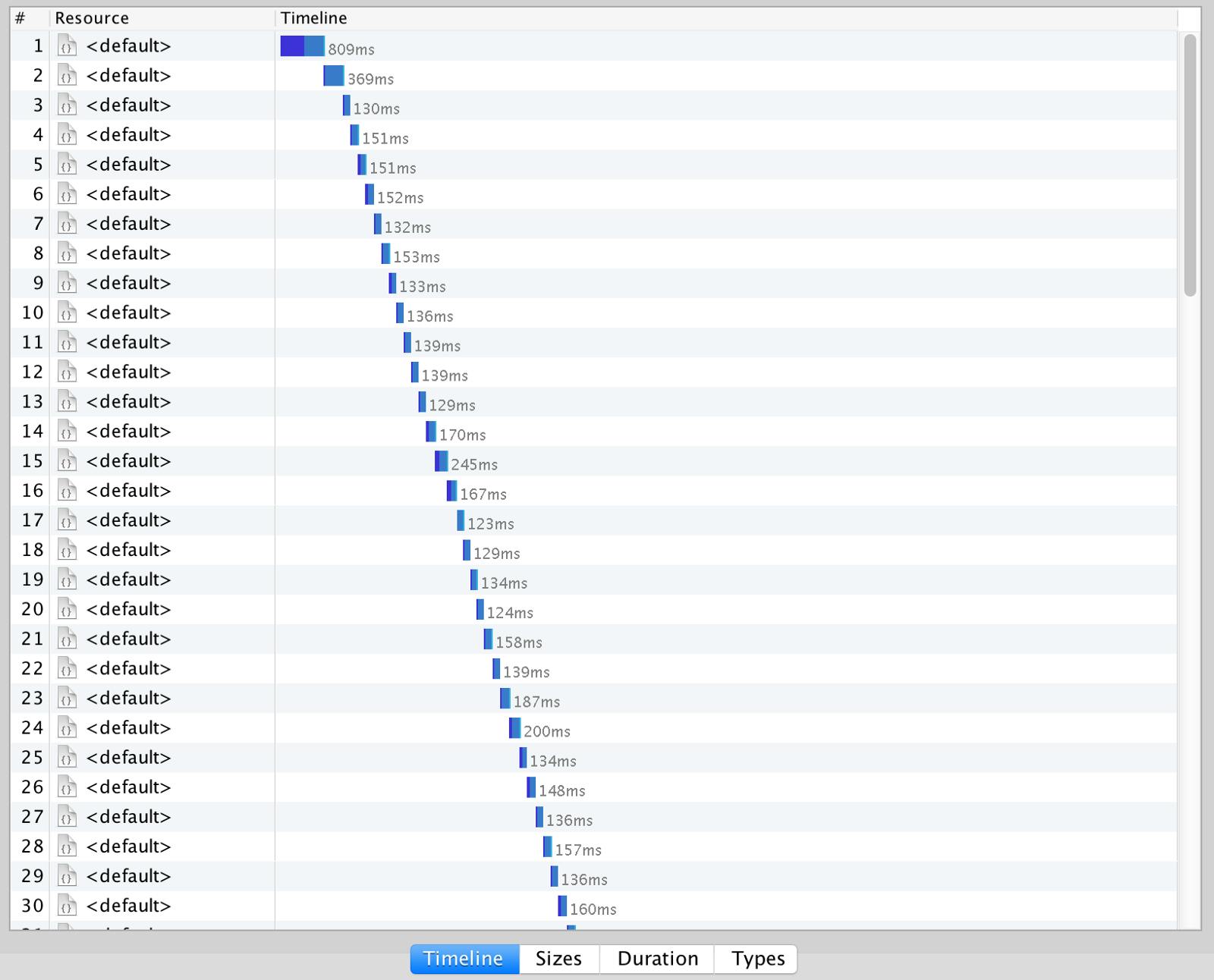

Suppose all user requests to an API happen one after another. That is predictable scaling, and it goes against the serverless ethos of scaling for unpredictability. If this is the case, you will only experience one cold start in the process (see the illustration below). Charles Proxy comes with an awesome tool to capture a request to an API Gateway endpoint and repeat it with a concurrency setting of 1.

As you can see in the timeline, only the first request experienced a cold start and was therefore noticeably slower compared to the rest.

1 out of 100: that's bearable, and it won't even show up in the 99th-percentile latency.
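Why doesn't one slow request show up in the 99th percentile? A small worked example using the nearest-rank percentile definition makes it concrete. The latencies below are made-up illustrative numbers (an assumption), not measurements from the timeline above.

```python
import math


def percentile(latencies_ms, p):
    """Nearest-rank percentile: the smallest sample such that at least
    p% of all samples are less than or equal to it."""
    ordered = sorted(latencies_ms)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


# Hypothetical numbers: one 1,500 ms cold start followed by 99 warm 50 ms requests.
latencies = [1500] + [50] * 99

print(percentile(latencies, 99))   # 50: the cold start sits outside the 99th percentile
print(percentile(latencies, 100))  # 1500: only the maximum reveals it
```

With 100 samples, the 99th percentile is the 99th smallest value, so a single outlier is excluded; two or more cold starts per 100 requests would start to show up.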

1.2 User Requests Came in Droves Instead!!! Scaling for Unpredictability

It's what serverless application design is all about: scaling with the unpredictability of user behavior, which is unlikely to follow the nice sequential pattern we saw above.

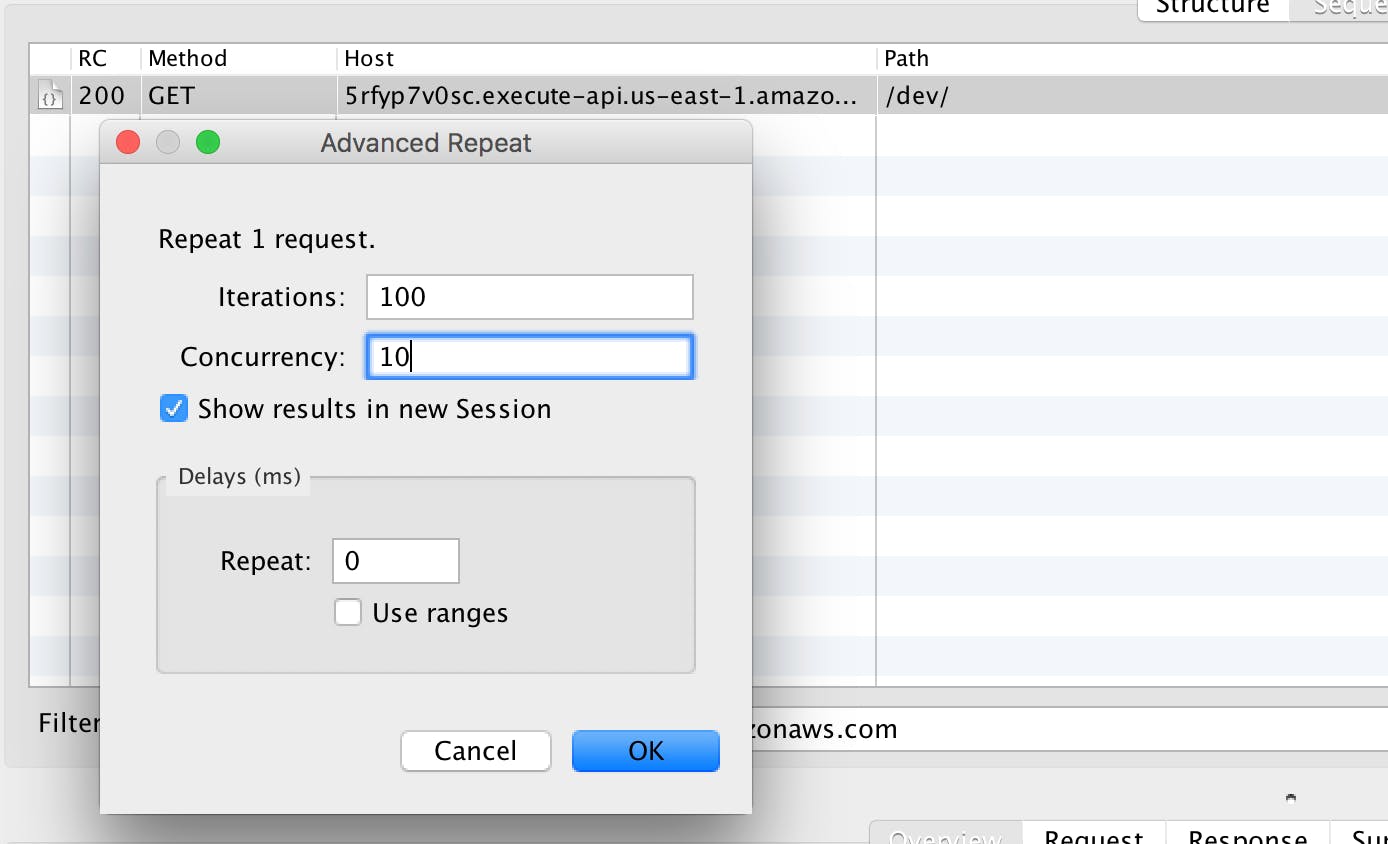

What happens if we receive 100 requests with a concurrency of 10?

See, all of a sudden, things are not as rosy as before. What the hell: all of my first 10 requests were cold starts!! This could spell trouble if you are developing your Lambda functions for applications with highly bursty traffic patterns around specific times of the day.
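A toy model makes the difference between the two runs concrete. It assumes (a simplification, not how Lambda actually schedules work) that requests arrive in waves of `concurrency` simultaneous requests and that each wave finishes before the next one starts; an idle execution is reused (warm start), otherwise a new one is created (cold start).

```python
def count_cold_starts(total_requests: int, concurrency: int) -> int:
    """Toy model: count cold starts when requests arrive in waves of
    `concurrency` simultaneous requests, each wave finishing before the next."""
    idle_executions = 0
    cold_starts = 0
    remaining = total_requests
    while remaining > 0:
        wave = min(concurrency, remaining)
        # Requests in this wave that no idle execution can serve need a new one.
        new_executions = max(0, wave - idle_executions)
        cold_starts += new_executions
        # Once the wave completes, every execution it used is idle again.
        idle_executions += new_executions
        remaining -= wave
    return cold_starts


print(count_cold_starts(100, 1))   # 1: the sequential Charles Proxy run
print(count_cold_starts(100, 10))  # 10: one cold start per concurrent execution
```

The number of cold starts tracks the peak number of concurrent executions, not the total number of requests.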


Bursty Traffic Pattern Applications

- food ordering services (e.g. JustEat, Deliveroo) have bursts of traffic around meal times

- e-commerce sites have highly concentrated bursts of traffic around popular shopping days of the year: Cyber Monday, Black Friday, etc.

- betting services have bursts of traffic around sporting events

- social networks have bursts of traffic around, well, just about any notable events happening around the world

For all of these services, the sudden bursts of traffic mean API Gateway has to add more concurrent executions of your Lambda function, which equates to a burst of cold starts, and that's bad news for you.
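How many new executions does a burst need? A rough estimate uses the concurrency formula from the AWS Lambda documentation: concurrency ≈ average requests per second × average request duration. The sketch below applies it to hypothetical traffic numbers for a food-ordering API, assuming a 200 ms average duration and no pre-warmed executions.

```python
import math


def required_concurrency(requests_per_second: int, avg_duration_ms: int) -> int:
    """Estimate concurrent executions needed at steady state
    (requests per second x average duration in seconds, rounded up)."""
    return math.ceil(requests_per_second * avg_duration_ms / 1000)


# Hypothetical traffic: a quiet morning vs. the lunchtime burst.
baseline = required_concurrency(requests_per_second=20, avg_duration_ms=200)
peak = required_concurrency(requests_per_second=500, avg_duration_ms=200)

print(baseline, peak)   # 4 100
print(peak - baseline)  # 96 new executions, i.e. roughly 96 cold starts during the ramp-up
```

Durations are kept in integer milliseconds so the rounding up is not thrown off by floating-point error.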

These are the most crucial periods for your business, and precisely when you want your service to be at its best behaviour.

1.2.1 Strategies to reduce Cold Starts

A. Strategy 01: Reducing Cold Starts Using Pre-warmups, Assuming Spikes Are Predictable

{A violation of the serverless ethos, but I prefer customer satisfaction more}

If the spikes are predictable, as is the case for food ordering services, you can mitigate the effect of cold starts by pre-warming your APIs.

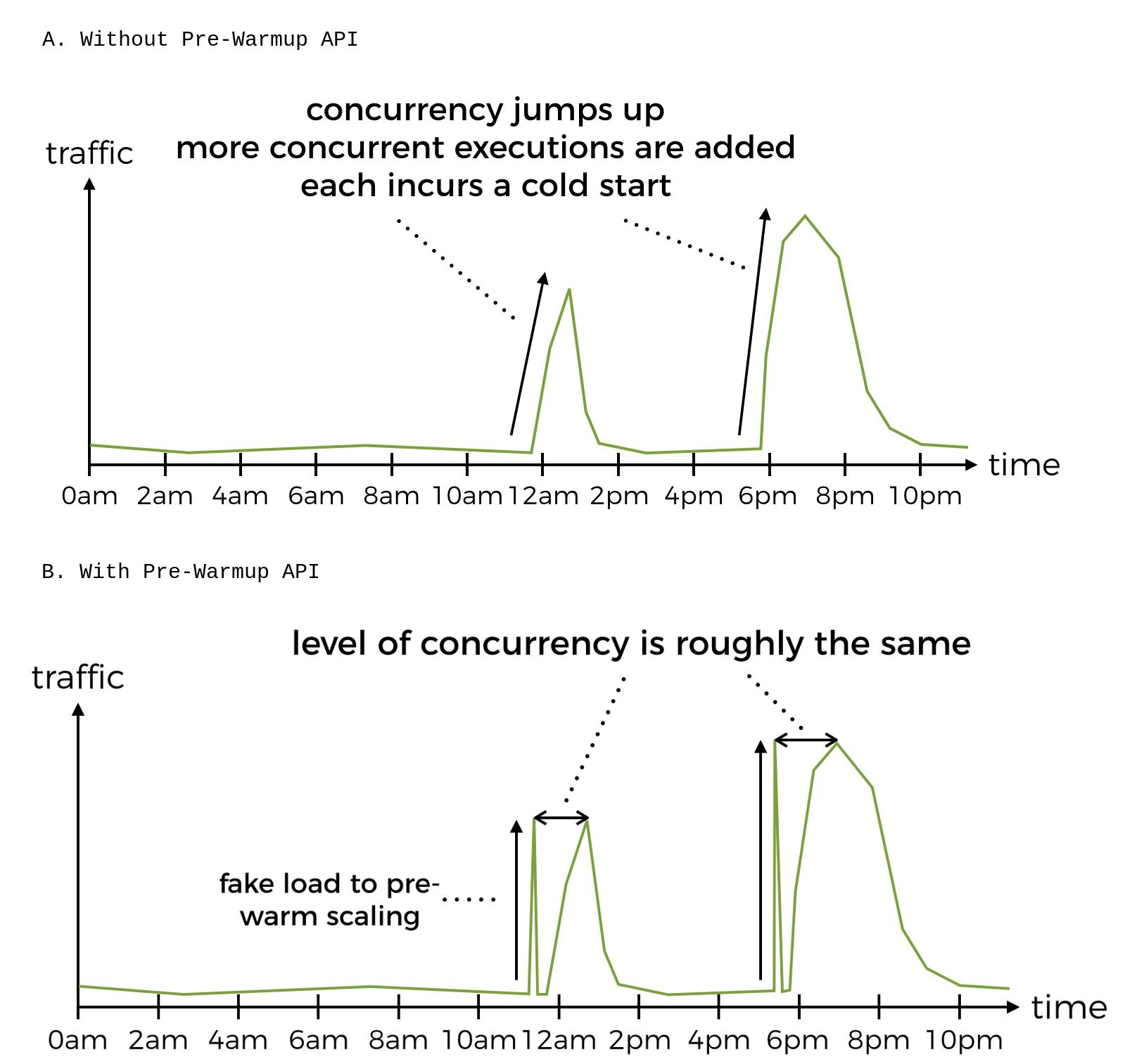

Pre-warm your API for predictable spikes: if you know there will be a burst of traffic at noon, then

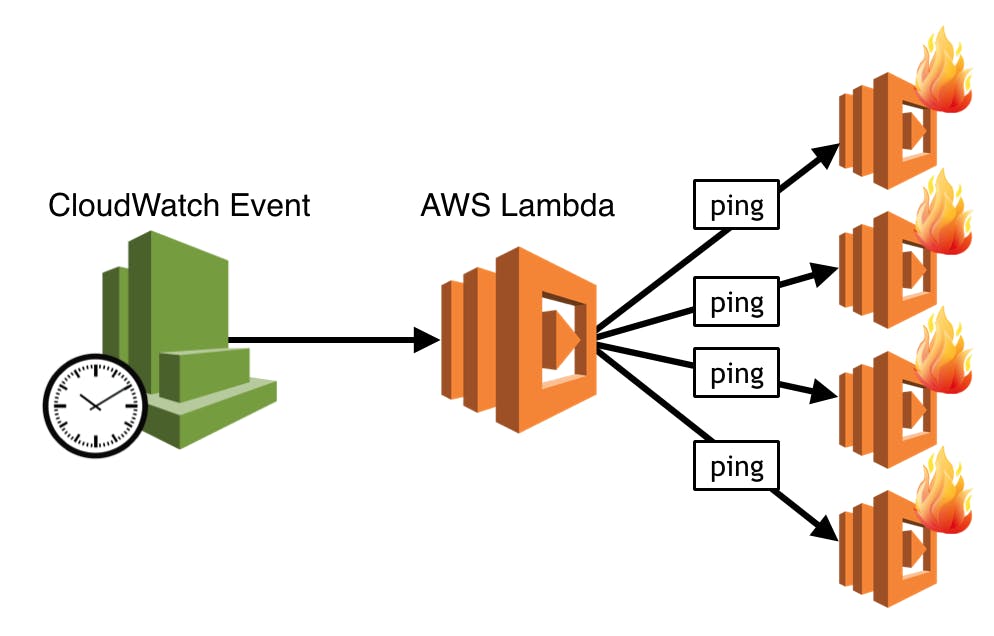

- you can schedule a cron job (i.e., a CloudWatch schedule + Lambda) for 11:58 am that

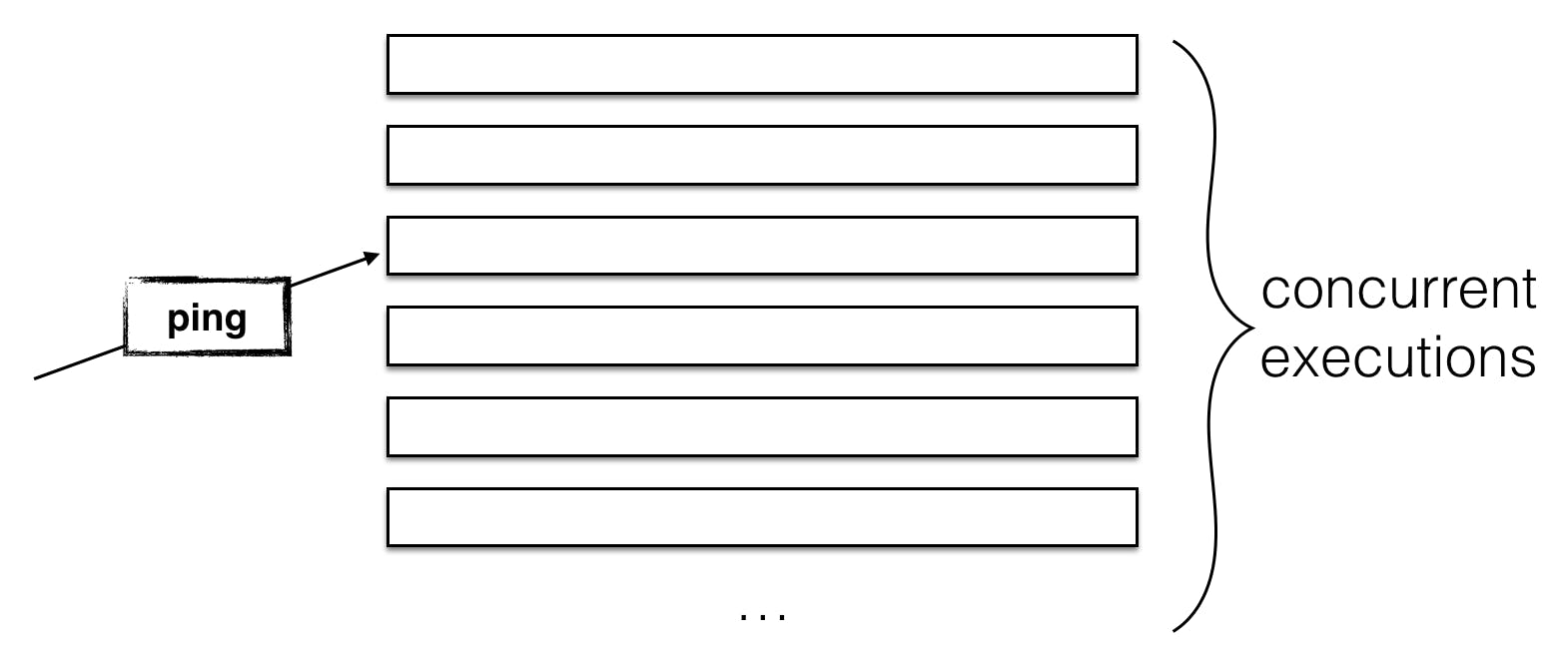

- will hit the relevant APIs with a blast of concurrent requests and

- cause API Gateway to spawn a sufficient number of concurrent executions of your function(s).
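The pre-warming job above can be sketched as a small scheduled Lambda function that fans out concurrent requests. This is a minimal sketch under assumptions: the endpoint URL and the fan-out size are hypothetical placeholders, and the schedule would be a CloudWatch Events / EventBridge cron expression such as `cron(58 11 * * ? *)` (evaluated in UTC). The number of executions spawned is only approximate: if the target function responds very quickly, some of these requests may be served by an execution that has already finished another one.

```python
import concurrent.futures
import urllib.request

# Hypothetical endpoint and fan-out size: replace with your own API
# and the concurrency you expect at peak.
API_URL = "https://example.execute-api.eu-west-1.amazonaws.com/prod/menu"
PREWARM_CONCURRENCY = 50


def send_request(url: str) -> int:
    """Send one GET request and return its HTTP status code."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return response.status


def prewarm(url: str, concurrency: int, send=send_request) -> list:
    """Fire `concurrency` requests at the same time so that API Gateway
    has to run (roughly) that many executions of the function at once."""
    with concurrent.futures.ThreadPoolExecutor(max_workers=concurrency) as pool:
        futures = [pool.submit(send, url) for _ in range(concurrency)]
        return [future.result() for future in futures]


def handler(event, context):
    # Intended to be triggered by a schedule, e.g. cron(58 11 * * ? *).
    statuses = prewarm(API_URL, PREWARM_CONCURRENCY)
    return {"sent": len(statuses)}
```

The `send` parameter is injectable so the fan-out logic can be exercised without touching a real endpoint.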

With and Without API Pre-warming

It's great that you mitigate the impact of cold starts during these predictable bursts of traffic,

but doesn't it betray the ethos of serverless compute, that you shouldn't have to worry about scaling? Yes. It's a betrayal!!!

But making users happy trumps everything else. Users are not happy if they have to wait for your function to cold start before they can order their food, and since the cost of switching to a competitor is low, they might not even come back the next day.

B. Strategy 02: Reducing the impact of cold starts by reducing the length of cold starts

- by authoring your Lambda functions in a language that doesn't incur a high cold start time, such as Python, Node.js or Go

- choose a higher memory setting for functions on the critical path of handling user requests (i.e. anything that the user would have to wait for a response from, including intermediate APIs)

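- optimizing your function's dependencies and package size

- stay as far away from VPCs as you possibly can! VPC access requires Lambda to create ENIs (elastic network interfaces) to the target VPC, and that can easily add 10s (yeah, you're reading it right) to your cold start. (AWS's 2019 improvements to Lambda VPC networking, which set up shared ENIs when the function is configured rather than at invocation time, have since largely removed this penalty.)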
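One common way to optimize dependencies: code at module scope runs once per execution environment, during the cold start, so keep it to what every request needs and load rarely used, heavy dependencies lazily. A minimal sketch, assuming a hypothetical heavy dependency behind `get_report_engine` that only a rare, hypothetical `/report` path uses:

```python
import functools
import json

# Module scope: runs once per execution environment, i.e. during the cold start.
# Keep it limited to what every request needs (here, only the json module).


@functools.lru_cache(maxsize=None)
def get_report_engine():
    """Hypothetical heavy dependency, loaded the first time a request needs it
    instead of on every cold start. In a real function this might be a large
    library import plus expensive setup."""
    return {"engine": "loaded"}


def handler(event, context):
    if event.get("path") == "/report":
        # Rare path: pays the loading cost once per execution environment.
        engine = get_report_engine()
        return {"statusCode": 200, "body": json.dumps(engine)}
    # Hot path: no heavy dependency is touched.
    return {"statusCode": 200, "body": json.dumps({"ok": True})}
```

Requests that never hit `/report` never pay for loading the heavy dependency, which keeps the cold start on the critical path short.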

Factors To Consider

- executions that are idle for a while would be garbage collected

- executions that have been active for a while (observed at somewhere between 4 and 7 hours; AWS does not document a fixed lifetime) would be garbage collected too

C. Strategy 03: What if the APIs are seldom used?

It’s actually quite likely that every time someone hits that API they will incur a cold start, so to your users, that API is always slow and they become even less likely to use it in the future, which is a vicious cycle.

For these APIs, you can do the following:

- a cron job that runs every 5–10 mins and pings the API (with a special ping request)

- so that by the time the API is used by a real user it’ll hopefully be warm and the user would not be the one to have to endure the cold start time.
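On the function side, the "special ping request" should be recognized and answered immediately, so keeping the execution warm costs almost nothing. A minimal sketch, assuming the scheduled job (e.g. an EventBridge rule with `rate(5 minutes)`) calls the API with a hypothetical `X-Warmup` header that real clients never send:

```python
import json

# Hypothetical marker header sent only by the scheduled pinger.
WARMUP_HEADER = "x-warmup"


def is_warmup_ping(event: dict) -> bool:
    # API Gateway proxy events carry request headers in event["headers"],
    # which can be None; header names are compared case-insensitively.
    headers = event.get("headers") or {}
    return any(name.lower() == WARMUP_HEADER for name in headers)


def handler(event, context):
    if is_warmup_ping(event):
        # The only goal was to keep this execution warm: return right away.
        return {"statusCode": 204, "body": ""}
    # ... real request handling goes here ...
    return {"statusCode": 200, "body": json.dumps({"message": "hello"})}
```

Short-circuiting before any business logic also keeps ping traffic out of downstream systems and metrics.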

PING at Regular Intervals: Not Effective for Busy Functions

This method for pinging API endpoints to keep them warm is less effective for “busy” functions with lots of concurrent executions

- your ping message would only reach one of the concurrent executions and

- if the level of concurrent user requests drop then some of the concurrent executions would be garbage collected after some period of idleness, and that is what you want (don’t pay for resources you don’t need).
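A toy model shows why a single periodic ping cannot hold a busy function's concurrency. Assumptions (not documented Lambda behavior): after a busy period ends at minute 0 only the pinger calls the function, each ping lands on one existing execution, and an execution idle for longer than a hypothetical `idle_timeout_min` is garbage collected.

```python
def warm_executions_after(concurrent_executions: int, ping_interval_min: int,
                          idle_timeout_min: int, duration_min: int) -> int:
    """Toy model: how many executions are still warm after `duration_min`
    minutes of being kept alive by a single periodic ping."""
    # Minute at which each execution last served a request (the busy period ends at 0).
    last_used = [0] * concurrent_executions
    for t in range(ping_interval_min, duration_min + 1, ping_interval_min):
        # Garbage collect executions that have been idle for too long.
        last_used = [u for u in last_used if t - u <= idle_timeout_min]
        if last_used:
            last_used[0] = t  # the ping reaches only one execution
        else:
            last_used.append(t)  # nothing left: the ping itself cold starts one
    return len(last_used)


# Hypothetical numbers: 10 executions, a ping every 5 minutes, a 15-minute idle timeout.
print(warm_executions_after(10, 5, 15, 10))  # 10: not garbage collected yet
print(warm_executions_after(10, 5, 15, 60))  # 1: only the pinged execution survives
```

The ping keeps exactly one execution warm, so the next burst still has to cold start the rest.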

To conclude, I want to echo Yan Cui: {don't take this post to be your one-stop guide to AWS Lambda cold starts.}