ServerlessArchitecture#04 AWS Lambda Cold Starts#PART-5 Let's See Why Your Lambda Throttled

Have you noticed recently that your AWS Lambda invocation requests are getting throttled? If so, your Lambda functions are probably not running as designed. Let’s examine the possible causes of and solutions to poor Lambda performance.

To summarize, the blog Use-Cases are:

- Have you recently noticed that your Lambda functions are getting throttled?

- Note that Lambda has a default concurrency limit of 1000 per region; with no reservations, all of it is unreserved.

- From now on, never say you don't know what a 429 is!

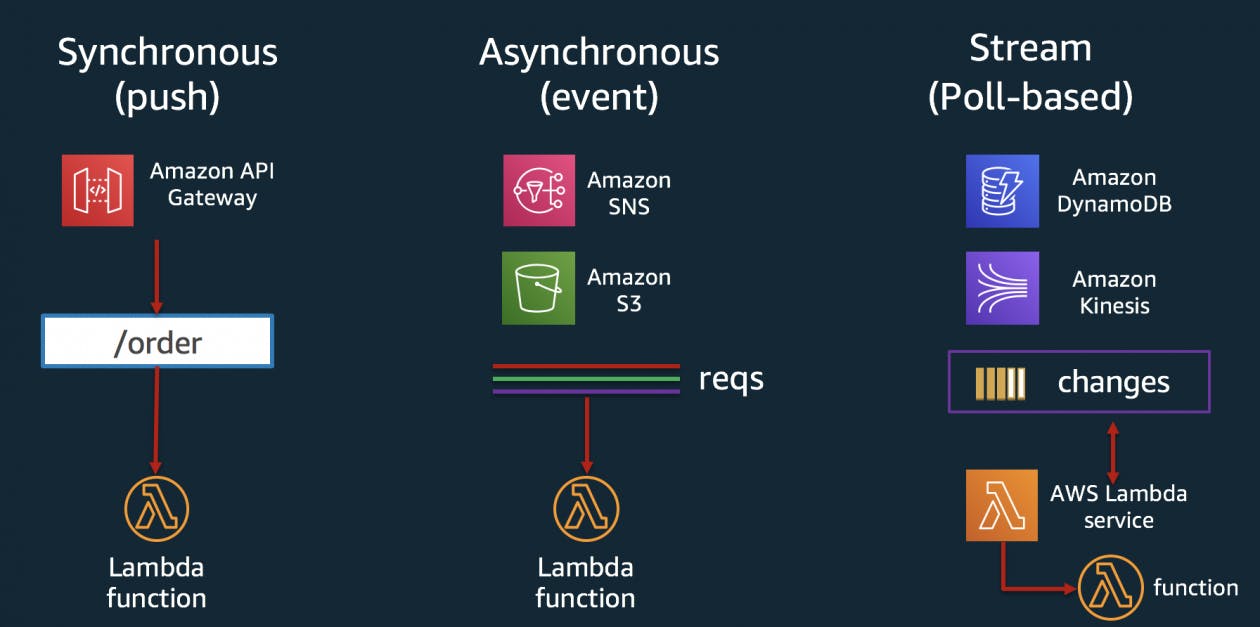

- Lambda Invocation Methods: Synchronous invocations, Asynchronous invocations and Polling, stream-based invocations

- Lambda Invocation Model Vs Error Behavior

- How to configure AWS Lambda limits?

- What is a function-level concurrency limit configuration?

- Troubleshooting a function with function-level allocation

- Troubleshooting a function using unreserved concurrency

- Problem Identified at AWS Lambda Throttling; How to resolve it?

- The END Twist : Your Lambda function might execute twice. Be prepared!

Always Remember: Functions with allocated concurrency can’t access unreserved concurrency.

NOTES:

A. A Lambda-To-Lambda Call !!! Is it a Bad Practice?

B. A Recall For You: How Lambda Works

C. At least 100 unreserved concurrency

D. How to increase your AWS Lambda concurrency limits

In AWS Lambda, a concurrency limit determines how many function invocations can run simultaneously in one region. Each region in your AWS account has a Lambda concurrency limit. The limit applies to all functions in the same region and is set to 1000 by default.

If you exceed a concurrency limit, Lambda starts throttling the offending functions by rejecting requests. Depending on the invocation type, you’ll run into the following situations:

| Invocation Type | Details |

| Synchronous invocations | - Invocation sources: API Gateway, Cloudfront, On demand - AWS returns a 429 status code. - The request is not retried. |

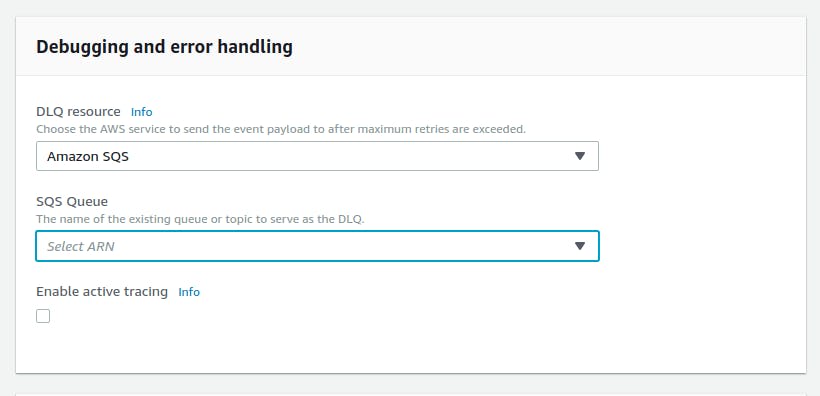

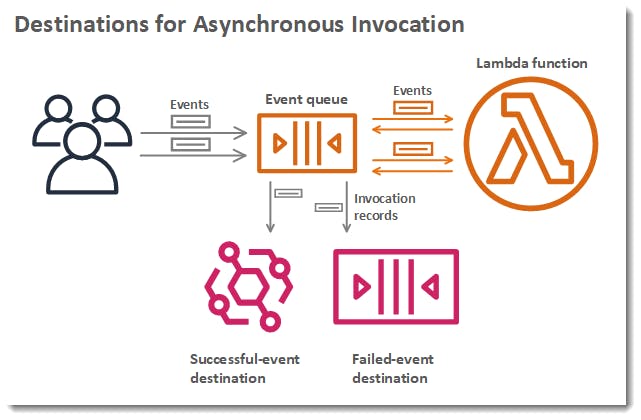

| Asynchronous invocations | - AWS retries the invocation twice. - If both attempts are unsuccessful, the request is not retried. - If you configure a dead letter queue (You can configure a dead letter queue in the console for the function), the request gets sent there.  |

| Polling, stream-based invocations | A. Invocation sources: DynamoDB, Kinesis AWS retries the invocation until the retention period expires. B. Invocation sources: SQS AWS Lambda reattempts the connection until the queue’s conditions for message deletion are met. |

Now let's compare each Lambda invocation model with its error behavior.

This summarizes the table above:

| Invocation Model | Error Behavior |
| --- | --- |
| Synchronous | None |
| Asynchronous | Built-in 2x retry |
| Poll-based | Retry based on data expiration |
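Since synchronous invocations get no built-in retry, the caller has to handle the 429 itself. Here is a minimal sketch of one common approach, exponential backoff with jitter. The `invoke` callable is hypothetical: it stands in for whatever wrapper you write around your own client call and is assumed to return an HTTP-style status code. The attempt count and delays are illustrative values, not recommendations from AWS.

```python
import random
import time


def invoke_with_backoff(invoke, max_attempts=5, base_delay=0.1, sleep=time.sleep):
    """Call `invoke()` until it stops returning 429 or attempts run out.

    `invoke` is a hypothetical zero-argument callable that returns an
    HTTP-style status code. Returns (last_status, attempts_used).
    """
    for attempt in range(1, max_attempts + 1):
        status = invoke()
        if status != 429:
            return status, attempt
        if attempt < max_attempts:
            # Exponential backoff with full jitter: wait a random time
            # between 0 and base_delay * 2^(attempt - 1) seconds.
            sleep(random.uniform(0, base_delay * 2 ** (attempt - 1)))
    return 429, max_attempts
```

The `sleep` parameter is only there so the wait can be swapped out in tests; in normal use you would leave the default.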

Want to see an illustration of the whole operation?

Let's see another illustration:

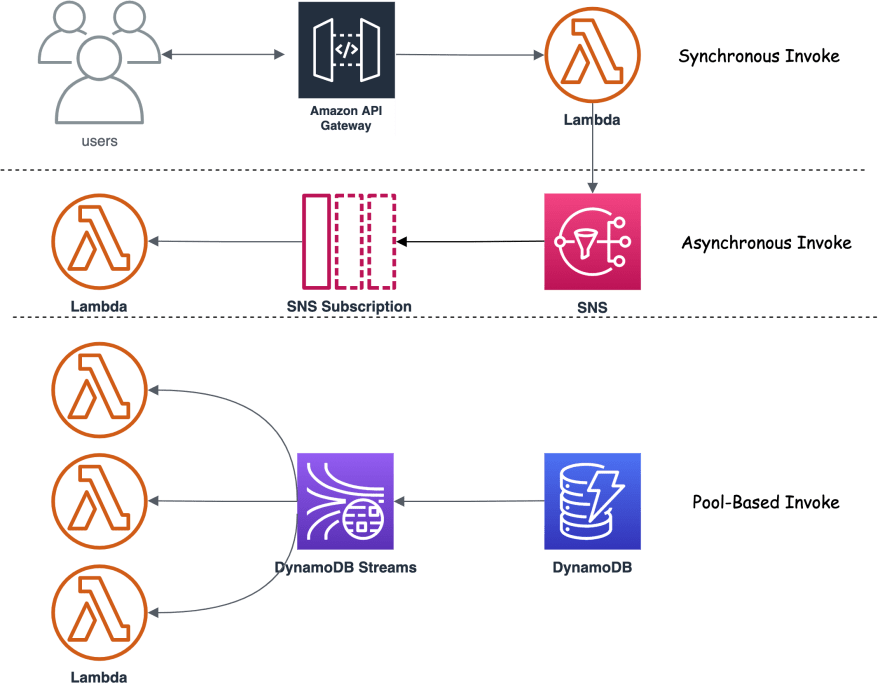

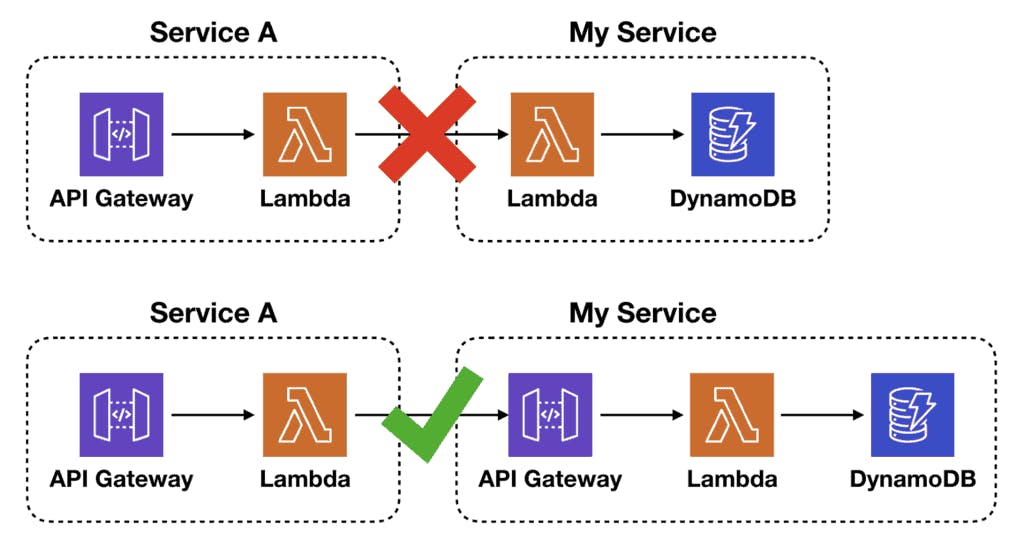

NOTE A: What do you see in the second illustration? A Lambda-to-Lambda call via SNS! Is it a bad practice?

Let's start with the example below:

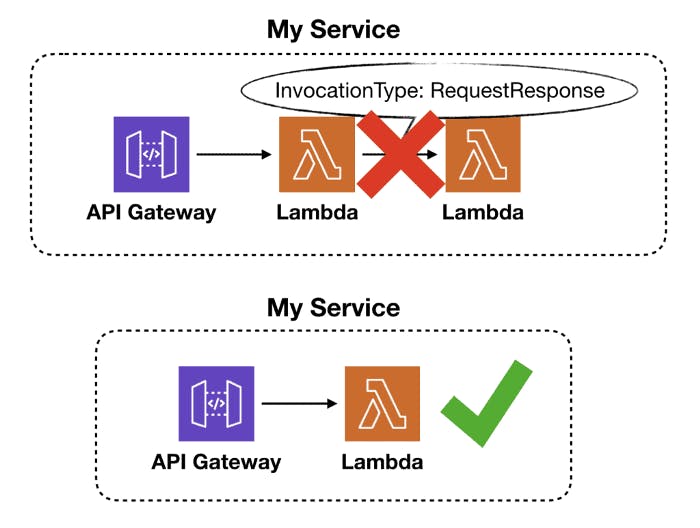

Recommendation 01: Never make a direct Lambda-to-Lambda call (if it's a request-response call, it's very bad).

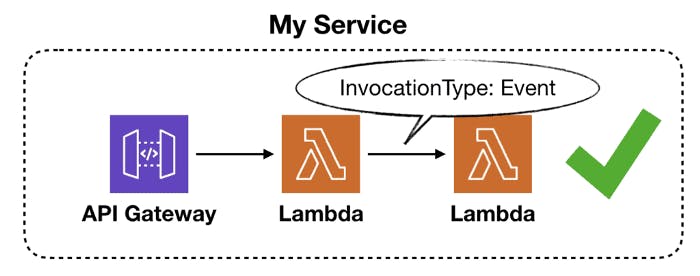

Recommendation 02: A direct Lambda-to-Lambda call is only valuable if it's an "event", not a "request-response".

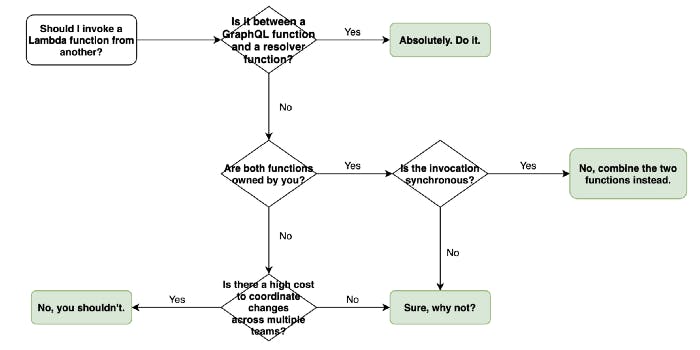

So, what decision criterion are the above recommendations based on? It depends! To help you decide, here’s a decision tree for when you should consider calling a Lambda function directly from another.

Whatever the invocation source, repeated throttling by AWS Lambda can result in your requests never actually being run, which shows up in production as a bug.

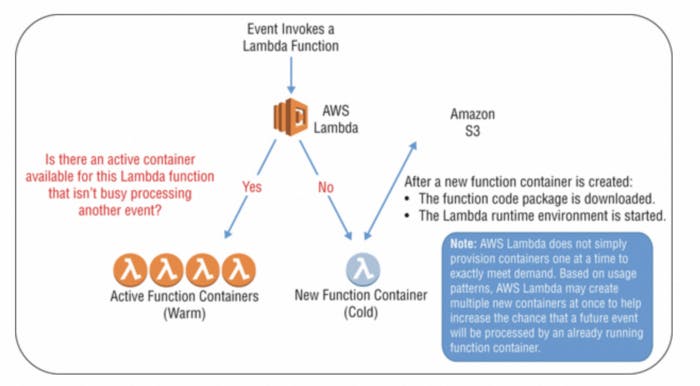

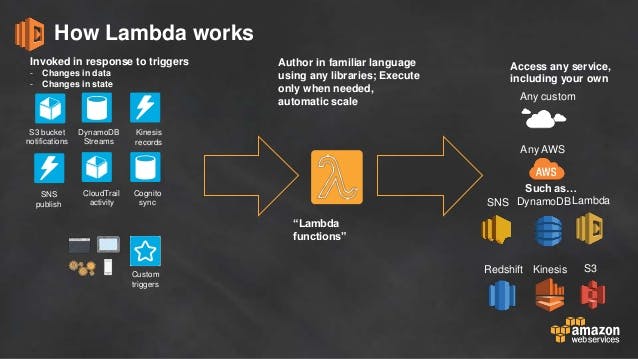

NOTE B: A Recall For You: How Lambda Works

B1. Synchronous Invocation Example

B2. Asynchronous Invocation Example

B3. Poll-Based Invocation Example

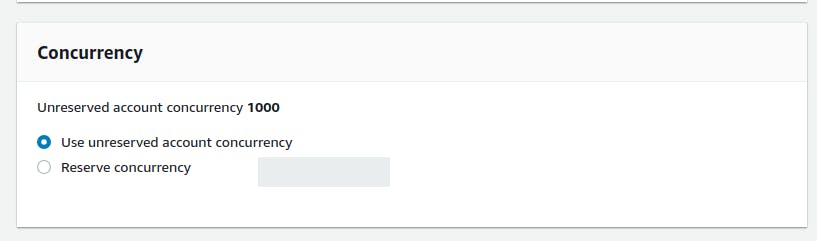

1.1 How to configure AWS Lambda limits?

How you should change the default concurrency limit depends on how your Lambda functions are configured. We’ll go over both configuration options: allocating your concurrency limit by function (function-level concurrency limit configuration) and drawing from the shared unreserved pool. If you haven't reserved concurrency for any function, jump down to troubleshooting the unreserved concurrency limit.

A. What is a function-level concurrency limit configuration?

A function-level concurrency limit is exactly what it sounds like: a reserved number of concurrent executions set aside for a specified function. It’s a configuration option that AWS Lambda makes available to you through the console.

Here’s an example to illustrate how function-level allocations work:

- If your account limit is 1000 and

- you reserved 200 concurrent executions for a specific function and

- 100 concurrent executions for another, then

- the rest of the functions in that region will share the remaining 700 executions.
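The arithmetic in the example above can be sketched in a few lines. The function names in the dictionary are hypothetical placeholders.

```python
def unreserved_concurrency(account_limit, reservations):
    """Return the concurrency left for functions without a reservation."""
    return account_limit - sum(reservations.values())


# Hypothetical function names; the numbers come from the example above.
reservations = {"function-a": 200, "function-b": 100}
print(unreserved_concurrency(1000, reservations))  # 700
```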

If you haven’t configured limits for any Lambda functions, jump to unreserved allocation troubleshooting.

NOTE C: At least 100 unreserved concurrency

AWS Lambda limits the total amount of concurrency you can reserve across all functions in one region. Lambda requires at least 100 unreserved concurrent executions per region in your account. If you are bumping up against your account limit, you can open a support ticket with AWS to increase it.
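To make the 100-unit floor concrete, here is a small sketch that checks whether a new reservation would still leave enough unreserved concurrency. The helper names are made up for illustration; the only fact taken from above is the minimum of 100.

```python
MIN_UNRESERVED = 100  # minimum unreserved concurrency described above


def can_reserve(account_limit, existing_reservations, new_reservation):
    """True if the new reservation still leaves at least MIN_UNRESERVED unreserved."""
    remaining = account_limit - sum(existing_reservations) - new_reservation
    return remaining >= MIN_UNRESERVED


def max_additional_reservation(account_limit, existing_reservations):
    """Largest amount that can still be reserved without breaking the floor."""
    return max(0, account_limit - sum(existing_reservations) - MIN_UNRESERVED)
```

With a limit of 1000 and existing reservations of 200 and 100, this gives room for at most 600 more reserved executions.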

B. Troubleshooting a function with function-level allocation

If you reserve concurrent executions for a specific function, AWS Lambda assumes that you know how many to reserve to avoid performance issues.

Functions with allocated concurrency can’t access unreserved concurrency.

If your function is being throttled, you can raise the concurrency allocated to the function.

When you plan to increase the concurrency limit for a function, do the following check:

Check 01: Functions are close to the unreserved concurrency limit

Check whether the functions sharing the unreserved concurrency allocation are getting close to that limit, because increasing reserved concurrency lowers the amount of available unreserved concurrency.

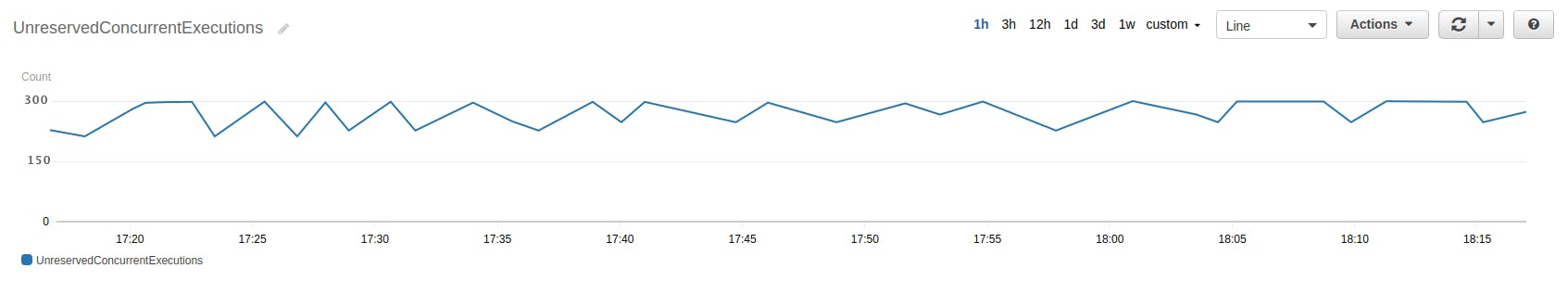

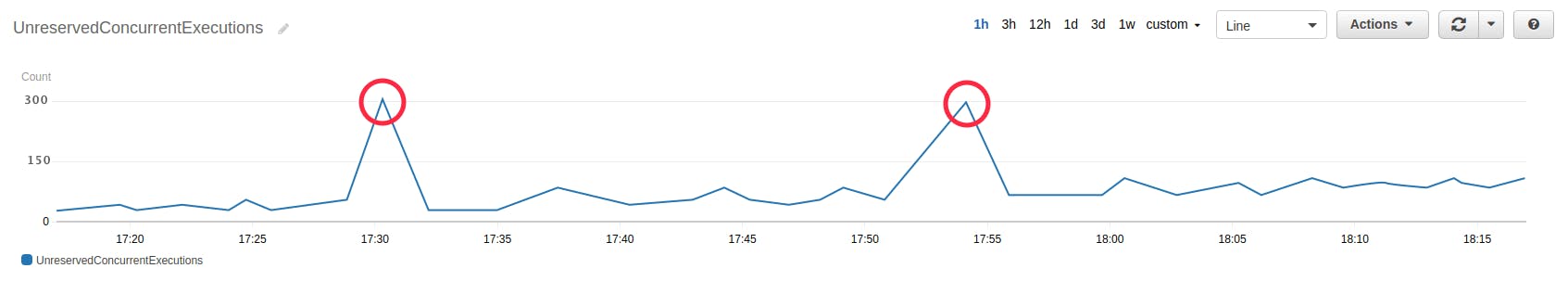

How do you perform this check? Look at CloudWatch’s UnreservedConcurrentExecutions metric in the AWS/Lambda namespace.
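If you prefer to script this check rather than use the console, a rough sketch could look like the following. It assumes `cloudwatch` is a boto3 CloudWatch client (for example `boto3.client("cloudwatch")`) and uses its `get_metric_statistics` call; the 24-hour window and 5-minute period are arbitrary choices for illustration.

```python
from datetime import datetime, timedelta, timezone


def unreserved_peak(cloudwatch, hours=24, period=300):
    """Return the highest UnreservedConcurrentExecutions value in the window.

    `cloudwatch` is assumed to be a boto3 CloudWatch client; any object
    with a compatible get_metric_statistics method also works.
    """
    end = datetime.now(timezone.utc)
    response = cloudwatch.get_metric_statistics(
        Namespace="AWS/Lambda",
        MetricName="UnreservedConcurrentExecutions",
        StartTime=end - timedelta(hours=hours),
        EndTime=end,
        Period=period,
        Statistics=["Maximum"],
    )
    peaks = [point["Maximum"] for point in response["Datapoints"]]
    return max(peaks, default=0)


def headroom(unreserved_limit, peak):
    """Unreserved concurrency still free at the observed peak."""
    return unreserved_limit - peak
```

A small headroom value means that raising a reservation elsewhere is likely to push the shared pool into throttling.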

Check 02: What if you don’t have unreserved concurrency to spare?

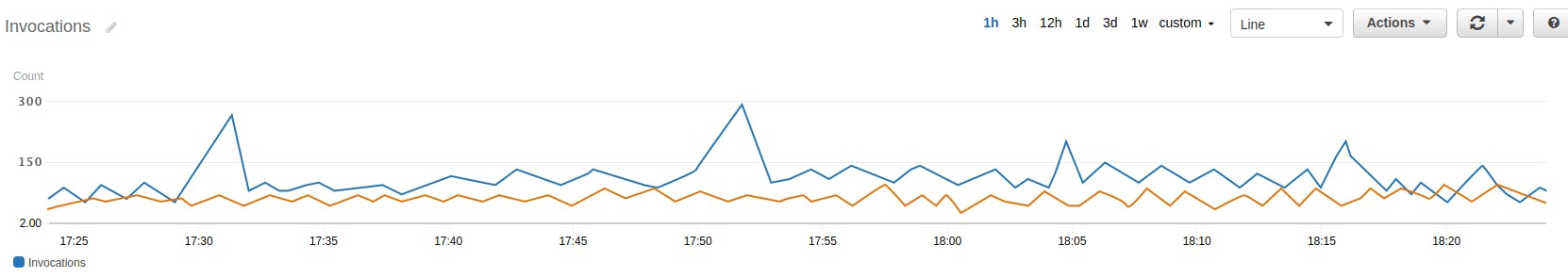

- If you don’t have unreserved concurrency to spare, compare the reserved amount for each of the other functions with the values for the Invocations metric in CloudWatch.

- If a function’s invocations never approach its reserved concurrency, it’s overallocated, and you can lower its reservation.

This will give you enough room to increase the allocation for the function you’re troubleshooting.
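Once you have the numbers, spotting overallocated functions is a simple comparison. The sketch below assumes you have already collected each function's reservation and its observed peak usage into two dictionaries; the 50% threshold is an arbitrary cut-off chosen for illustration.

```python
def overallocated(reserved, peak_usage, threshold=0.5):
    """Map each overallocated function to the concurrency it could release.

    A function counts as overallocated when its observed peak stays below
    `threshold` times its reservation (an assumed, adjustable cut-off).
    """
    result = {}
    for name, limit in reserved.items():
        peak = peak_usage.get(name, 0)
        if peak < limit * threshold:
            result[name] = limit - peak
    return result
```

For example, a function reserved at 200 that never peaks above 40 could give back up to 160 executions.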

C. Troubleshooting a function using unreserved concurrency

If your function is drawing from the pool of unreserved concurrent executions, determine what is using up your unreserved concurrency allocation.

To do so, try the steps below:

- Access the UnreservedConcurrentExecutions metric in CloudWatch to determine if you are consistently (or at regular intervals during bursty workloads) bumping up against your unreserved concurrency limit.

- If you are nearing that limit, you can increase unreserved concurrency by reducing function-level limits (reserved concurrency), or request a higher account concurrency limit.

Scenario 01: The unreserved concurrency pool is being exhausted consistently.

Scenario 02: Occasional bursts in invocations suggest one of your functions has a bursty workload.

Scenario 03: Bursty function that is periodically spiking

Otherwise, look for a bursty function that is periodically spiking and using up the unreserved concurrency pool. Check the Invocations metric for each function in CloudWatch and find your bursty function.

In this graph, the function graphed in blue is bursting occasionally and using up the unreserved concurrency pool.
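If you would rather not eyeball the graphs, one rough heuristic is to compare each function's peak with its typical level. The sketch below assumes you have exported the Invocations series per function into lists of counts; the ratio of 5 is an arbitrary threshold, not an AWS rule.

```python
from statistics import median


def find_bursty(invocations, ratio=5.0):
    """Return functions whose peak is at least `ratio` times their median."""
    bursty = []
    for name, series in invocations.items():
        if not series:
            continue  # no data points, nothing to judge
        # Treat a zero baseline as 1 so rare spikes from idle still count.
        baseline = max(median(series), 1)
        if max(series) >= ratio * baseline:
            bursty.append(name)
    return bursty
```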


1.2 Problem Identified at AWS Lambda Throttling; How to resolve it?

Once you identify a bursty function, you can start by looking at the upstream event source; if that search is unfruitful, you’ll want to do some function-level allocation housekeeping. You have a couple of options:

- Identify the functions that absolutely cannot be throttled, and reserve concurrency for them.

- Reserve a portion of your concurrency limit for your bursty function that will guarantee your other functions can still use the remaining unreserved capacity and not be throttled. This will result in the bursty function being throttled when it would otherwise spike.
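To see why capping the bursty function protects everyone else, here is a deliberately simplified model. It is not how Lambda schedules requests internally: it just caps reserved functions at their reservation and lets the remaining functions draw from the unreserved pool in dictionary order. The function names are hypothetical.

```python
def throttled(demand, reserved, account_limit):
    """Return how many concurrent requests each function has throttled.

    Simplified model: functions with a reservation are capped at it; all
    others share the unreserved pool, served in dictionary order.
    """
    pool = account_limit - sum(reserved.values())
    result = {}
    for name, wanted in demand.items():
        if name in reserved:
            granted = min(wanted, reserved[name])
        else:
            granted = min(wanted, pool)
            pool -= granted
        result[name] = wanted - granted
    return result


demand = {"bursty": 900, "checkout": 300}  # hypothetical peak demand
print(throttled(demand, {}, 1000))               # {'bursty': 0, 'checkout': 200}
print(throttled(demand, {"bursty": 400}, 1000))  # {'bursty': 500, 'checkout': 0}
```

Without a reservation the spike starves `checkout`; with the bursty function capped at 400, the throttling moves to the function that caused it.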

NOTE D : How to increase your AWS Lambda concurrency limits

Open a support ticket with AWS to request an increase in your account level concurrency limit.

- Create a new support case

- Set the Regarding value to Service Limit Increase.

- Choose Lambda as the Limit Type.

- Fill out the body of the form.

- Wait for AWS to respond to your request.

They can increase your limit so you will be able to run more Lambda functions concurrently.

The END Twist : Your Lambda function might execute twice. Be prepared!

Are you confused when scheduled Lambdas execute twice, SNS messages trigger an invocation three times, or your handmade S3 inventory is out of sync because events occurred twice?

Bad news: sooner or later, your Lambda function will be invoked multiple times. You have to be prepared! The reasons are retries on errors and event sources that guarantee at-least-once delivery (e.g., CloudWatch Events, SNS, …). I will discuss this in another blog post. For now, cheer up!
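As a small teaser, one common way to prepare is to make the handler idempotent by remembering which events were already handled. The sketch below is only an illustration: the in-memory set stands in for a durable store (it would not survive across Lambda execution environments), and the `id` field is a hypothetical name for whatever uniquely identifies your event.

```python
processed_ids = set()  # stand-in for a durable store such as a database table


def handle_once(event, process):
    """Run `process(event)` only the first time a given event ID is seen."""
    event_id = event["id"]  # hypothetical field name
    if event_id in processed_ids:
        return "skipped"
    process(event)
    processed_ids.add(event_id)
    return "processed"
```

Calling `handle_once` twice with the same event runs the work once and reports `"skipped"` the second time.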