ServerlessArchitecture#10 - AWS Optimization Best Practice #Part-01: Optimized Dependency Usage

AWS Optimization Best Practices

| Optimization Best Practice | Action |
| --- | --- |
| Avoid monolithic functions | Reduce deployment package size; use micro/nano services |
| Minify/uglify production code | Browserify/Webpack |
| Optimize dependencies (and imports) | Up to 120 ms faster with the Node.js SDK |
| Lazy initialization of shared libs/objs | Helps if multiple functions per file |

To summarize, this post covers:

- AWS optimization best practices

- Optimizing dependency usage

- How to control dependencies using the deployment package

- Trimming dependencies to stay within the package limits: 50 MB (zipped, for direct upload), 250 MB (unzipped, including layers), 3 MB (console editor)

- Note: Functions with many dependencies are 5-10 times slower to start.

- My story of slimming down Lambda deployment zips for a client

- Failing fast: don't let your Lambda function spin helplessly while waiting

- Optimized dependency usage (Node.js SDK & X-Ray): narrower imports that load 5 ms to 140 ms faster in the example at the end of this post

1.1 Optimized Dependency Usage

A. Control Dependencies

The Lambda execution environment contains many libraries such as the AWS SDK for the Node.js and Python runtimes. (For a full list, see the Lambda Execution Environment and Available Libraries.)

To enable the latest set of features and security updates, Lambda periodically updates these libraries. These updates can introduce subtle changes to the behavior of your Lambda function.

To have full control of the dependencies your function uses, we recommend packaging all your dependencies with your deployment package.
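In a Node.js project, one way to act on this is to pin exact versions in package.json, so the libraries bundled into the deployment package do not drift between builds. The following is a minimal sketch under that assumption; findUnpinnedDependencies is a hypothetical helper (not part of npm or any AWS tool), and the version numbers are purely illustrative.

```javascript
// Hypothetical helper: report dependencies whose version is a range
// (for example ^1.2.3, ~1.2.3, * or "latest") rather than an exact version.
const EXACT_VERSION = /^\d+\.\d+\.\d+(?:-[0-9A-Za-z.-]+)?(?:\+[0-9A-Za-z.-]+)?$/;

function findUnpinnedDependencies(dependencies) {
  return Object.entries(dependencies || {})
    .filter(([, version]) => !EXACT_VERSION.test(version))
    .map(([name]) => name);
}

// Illustrative input shaped like the "dependencies" map of a package.json.
const sampleDependencies = {
  'aws-sdk': '2.1000.0',        // exact: the build always bundles this version
  'aws-xray-sdk-core': '^3.0.0' // range: may resolve differently on the next install
};

console.log(findUnpinnedDependencies(sampleDependencies));
```

A check like this could run in a build step and fail the build when the returned list is not empty.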

B. Trim Dependencies

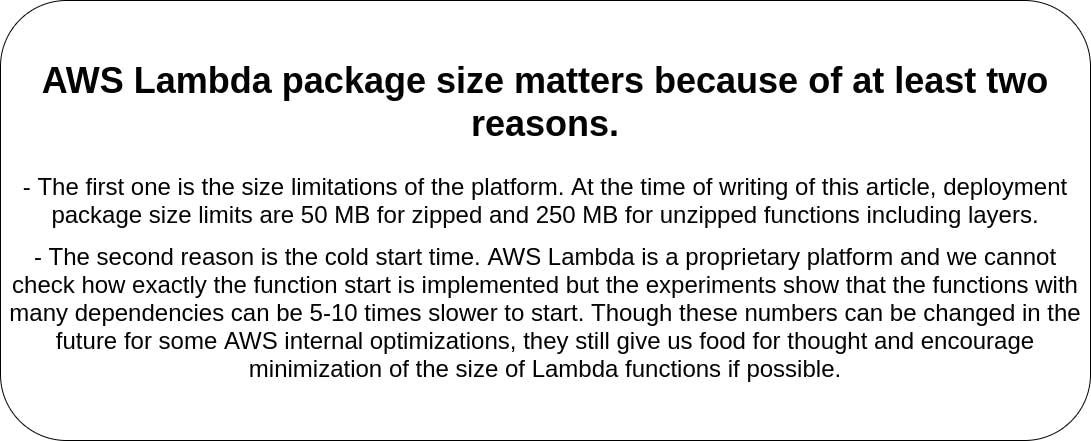

Lambda function code packages are permitted to be at most 50 MB when compressed and 250 MB when extracted in the runtime environment.

- If you are including large dependency artifacts with your function code, you may need to trim the dependencies included to just the runtime essentials.

This also allows your Lambda function code to be downloaded and installed in the runtime environment more quickly for cold starts.

For more on reducing your Lambda package size when uploading a function, read the blog referenced below. In short:

(a) It explains how to minimize the size of AWS Lambda functions written in TypeScript.

(b) It also covers Webpack, a powerful bundler with many configuration options for tuning the bundle to your needs, for instance adding source maps or speeding up the build with its caching mechanism.
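To make the Webpack point concrete, here is a minimal sketch of what a webpack.config.js for a Lambda handler might look like. The entry path and output folder are assumptions for illustration, and the cache option shown is the webpack 5 form; this is not the configuration from the referenced blog.

```javascript
// webpack.config.js (sketch): bundle one Lambda handler into a single minified file.
const path = require('node:path');

const config = {
  mode: 'production',             // enables minification and tree shaking defaults
  target: 'node',                 // do not bundle Node.js built-ins such as fs or path
  entry: './src/handler.js',      // assumed location of the handler source
  devtool: 'source-map',          // emit source maps for readable stack traces
  cache: { type: 'filesystem' },  // webpack 5: persist build cache between runs
  output: {
    path: path.resolve('dist'),   // assumed output folder; must be an absolute path
    filename: 'handler.js',
    libraryTarget: 'commonjs2'    // export the handler via module.exports
  }
};

module.exports = config;
```

Only the code reachable from the entry file ends up in dist/handler.js, which is what keeps the zipped package small.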

Whatever the approach, we must always remember the limits of Lambda package size:

Deployment package size

- 50 MB (zipped, for direct upload)

- 250 MB (unzipped, including layers)

- 3 MB (console editor)
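These limits are easy to check in a build script before uploading. The sketch below is one possible guard; checkPackageSize is a hypothetical helper, and it assumes that 1 MB means 1024 * 1024 bytes.

```javascript
// Hypothetical pre-deploy guard for the package size limits listed above.
const MB = 1024 * 1024; // assumption: limits are counted in units of 1024 * 1024 bytes

const LIMITS = {
  zippedBytes: 50 * MB,    // zipped, for direct upload
  unzippedBytes: 250 * MB  // unzipped, including layers
};

function checkPackageSize({ zippedBytes, unzippedBytes }) {
  const violations = [];
  if (zippedBytes > LIMITS.zippedBytes) {
    violations.push(`zipped size ${zippedBytes} exceeds ${LIMITS.zippedBytes} bytes`);
  }
  if (unzippedBytes > LIMITS.unzippedBytes) {
    violations.push(`unzipped size ${unzippedBytes} exceeds ${LIMITS.unzippedBytes} bytes`);
  }
  return { ok: violations.length === 0, violations };
}

// Example: a 34 MB zip that extracts to 120 MB passes both checks.
console.log(checkPackageSize({ zippedBytes: 34 * MB, unzippedBytes: 120 * MB }));
```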

My Story: Slimming Down Lambda Deployment Zips

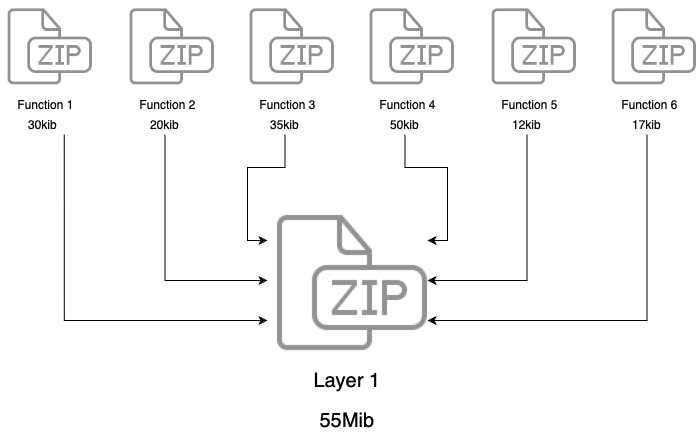

I wanted to share a technique I’ve had to use to reduce the size of zips that are used to deploy code to Lambda, since they’re limited to 50 MB.

The raster portion of the Lambda Tiler is a Python app that has some binary dependencies that aren’t included in the Lambda runtime: numpy, GDAL, rasterio, and PIL. Fortunately, all of these are distributed as wheels built with manylinux, so OS dependencies aren’t immediately necessary.

Unfortunately, the combined size of the libraries and all of the .py files exceeds 50 MB, even when zipped as tightly as possible (by Apex, which is a fantastic tool for Lambda deployment).

Then I had an epiphany.

Borrowing a technique used to determine code coverage, where files are instrumented prior to executing tests and then post-processed to determine which lines were executed, I created a Docker image that I could use to run the Flask app directly.

After the image finished building, I shelled into it using docker run and created a placeholder file (start). I started the Flask version of the tiler (python app.py), manually triggered requests that I knew would exercise all of the functionality (like a test), then stopped the app.

Next, I used find to generate a list of files that were accessed while the app was running (i.e. had an atime more recent than start): find /tmp/virtualenv/lib/python2.7/site-packages -type f -anewer start

This included only the shared libraries my app needed and omitted things like test data, documentation, and other files that would bloat the zip without being necessary. Using this as a whitelist reduced the size of the deployment zip to 34MB, putting me well under the limit and providing overhead for future module-provided functionality.
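The same access-time idea can be sketched in Node.js, for example when paring down node_modules. The function below is a hypothetical equivalent of find -type f -anewer: it lists regular files whose access time is more recent than the marker file's modification time. It assumes the file system actually records access times (mounts using noatime will not).

```javascript
const fs = require('node:fs');
const path = require('node:path');

// Hypothetical helper: list regular files under rootDir that were accessed
// after markerPath was last modified (the comparison find -anewer performs).
function filesAccessedSince(rootDir, markerPath) {
  const markerMtimeMs = fs.statSync(markerPath).mtimeMs;
  const hits = [];

  const walk = (dir) => {
    for (const entry of fs.readdirSync(dir, { withFileTypes: true })) {
      const fullPath = path.join(dir, entry.name);
      if (entry.isDirectory()) {
        walk(fullPath);
      } else if (entry.isFile() && fs.statSync(fullPath).atimeMs > markerMtimeMs) {
        hits.push(fullPath);
      }
    }
  };

  walk(rootDir);
  return hits.sort();
}
```

Calling filesAccessedSince with the dependency folder and the start marker, after exercising the app, yields the whitelist of files to package.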

In summary, if you’re building shared libraries yourself, strip them after building (some include an install-strip Make target for this purpose) and use code coverage techniques (find -anewer) to facilitate packaging only the parts of dependencies that are actually used. (This same approach is as valuable, if not more, when paring down node_modules, as many libraries include much more than is actually necessary.)

NOTE: Conveniently, Michael Hart has built a Docker image that is a pretty close simulacrum to what AWS is running (Amazon Linux + additional runtime pieces) and that can be used locally as part of a build process. Find the Docker Image Here.

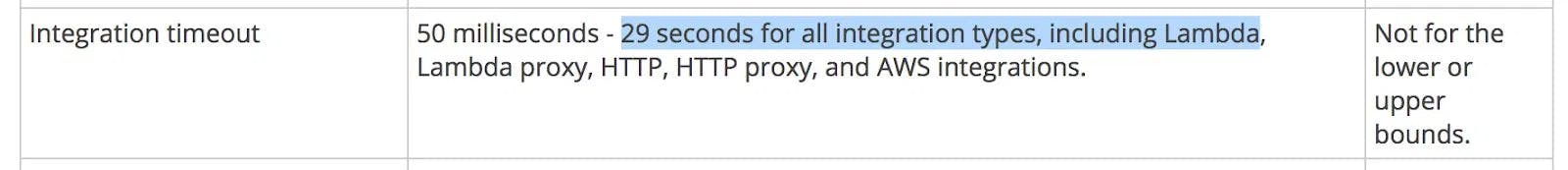

C. Fail Fast

Configure reasonably short timeouts for any external dependencies, as well as a reasonably short overall Lambda function timeout.

- Don’t allow your function to spin helplessly while waiting for a dependency to respond.

Because Lambda is billed based on the duration of your function execution, you don’t want to incur higher charges than necessary when your function dependencies are unresponsive.
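A minimal sketch of failing fast in a Node.js handler follows. Both helpers are hypothetical: withTimeout rejects if a dependency call takes too long, and dependencyBudgetMs caps the dependency timeout so it never exceeds the time the invocation has left. In a real handler the remaining time would come from context.getRemainingTimeInMillis(); the default values here are assumptions to tune per workload.

```javascript
// Reject if the wrapped promise has not settled within ms milliseconds.
function withTimeout(promise, ms, label = 'dependency') {
  let timer;
  const timeout = new Promise((_, reject) => {
    timer = setTimeout(() => reject(new Error(`${label} timed out after ${ms} ms`)), ms);
  });
  // Always clear the timer so it does not keep the event loop busy.
  return Promise.race([promise, timeout]).finally(() => clearTimeout(timer));
}

// Cap the dependency timeout at maxMs, leaving reserveMs for error handling
// before the overall function timeout is reached. Never returns a negative value.
function dependencyBudgetMs(remainingMs, maxMs = 2000, reserveMs = 500) {
  return Math.max(0, Math.min(maxMs, remainingMs - reserveMs));
}

// Sketch of usage inside a handler (callDependency is a stand-in for any I/O call):
// const budget = dependencyBudgetMs(context.getRemainingTimeInMillis());
// const result = await withTimeout(callDependency(), budget, 'inventory service');
```

Note that the race only stops the function from waiting; the underlying request should also be given its own timeout or be cancelled where the client library supports it.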

Unlike invocation errors, function errors don't cause Lambda to return a 400-series or 500-series status code. If the function returns an error, Lambda indicates this by including a header named X-Amz-Function-Error, and a JSON-formatted response with the error message and other details.
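Because the status code alone does not reveal a function error, a caller has to inspect the response. The sketch below assumes a response object shaped like the result of the AWS SDK for JavaScript invoke call, where the X-Amz-Function-Error header is surfaced as the FunctionError field and the JSON body as Payload. interpretInvokeResponse is a hypothetical helper, and the errorType/errorMessage fields are assumed to be present in the error payload.

```javascript
// Hypothetical helper: separate successful results from function errors.
function interpretInvokeResponse(response) {
  // Payload may be a string, a Buffer or a Uint8Array depending on the SDK version.
  const text = response.Payload ? Buffer.from(response.Payload).toString('utf8') : '';
  const payload = text ? JSON.parse(text) : null;

  if (response.FunctionError) {
    return {
      ok: false,
      kind: response.FunctionError, // value of the X-Amz-Function-Error header
      errorType: payload ? payload.errorType : undefined,
      message: payload ? payload.errorMessage : undefined
    };
  }
  return { ok: true, result: payload };
}

// Illustrative response: status 200, yet the function itself failed.
const sampleResponse = {
  StatusCode: 200,
  FunctionError: 'Unhandled',
  Payload: JSON.stringify({ errorType: 'Error', errorMessage: 'upstream timed out' })
};

console.log(interpretInvokeResponse(sampleResponse));
```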

Refresher:

Depending on the event source you’re using with Lambda, there are also other limits to consider. For example, Amazon API Gateway has a default integration timeout limit of 29 seconds.

This means that even if your function can run for five minutes, API Gateway will time out after 29 seconds and return a 5xx error to the caller. Knowing these limits can prevent future issues.

D. Optimized Dependency Usage (Node.js SDK & X-Ray)

// const AWS = require('aws-sdk')

const DynamoDB = require('aws-sdk/clients/dynamodb') // **125ms faster**

// const AWSXRay = require('aws-xray-sdk')

const AWSXRay = require('aws-xray-sdk-core') // **5ms faster**

// const AWS = AWSXRay.captureAWS(require('aws-sdk'))

const dynamodb = new DynamoDB.DocumentClient()

AWSXRay.captureAWSClient(dynamodb.service) // **140ms faster**
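Narrow imports can be combined with the lazy initialization practice from the table at the top of this post: a module is loaded only on the first code path that needs it, and the loaded instance is reused afterwards. The sketch below is a generic illustration; lazy is a hypothetical helper, and the built-in zlib module stands in for a heavier dependency so the example runs without any installed packages.

```javascript
// Hypothetical helper: run factory on first use only, then reuse the result.
function lazy(factory) {
  let value;
  let loaded = false;
  return () => {
    if (!loaded) {
      value = factory();
      loaded = true;
    }
    return value;
  };
}

// Stand-in for a heavy dependency; nothing is loaded until getZlib() is called.
const getZlib = lazy(() => require('node:zlib'));

// Only invocations that actually compress something pay the load cost.
function compress(text) {
  return getZlib().gzipSync(Buffer.from(text));
}

console.log(compress('hello').length > 0);
```

When several handlers share one file, each handler then loads only what it touches.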